WWDC 2014: Advanced Graphics and Animations for iOS Apps

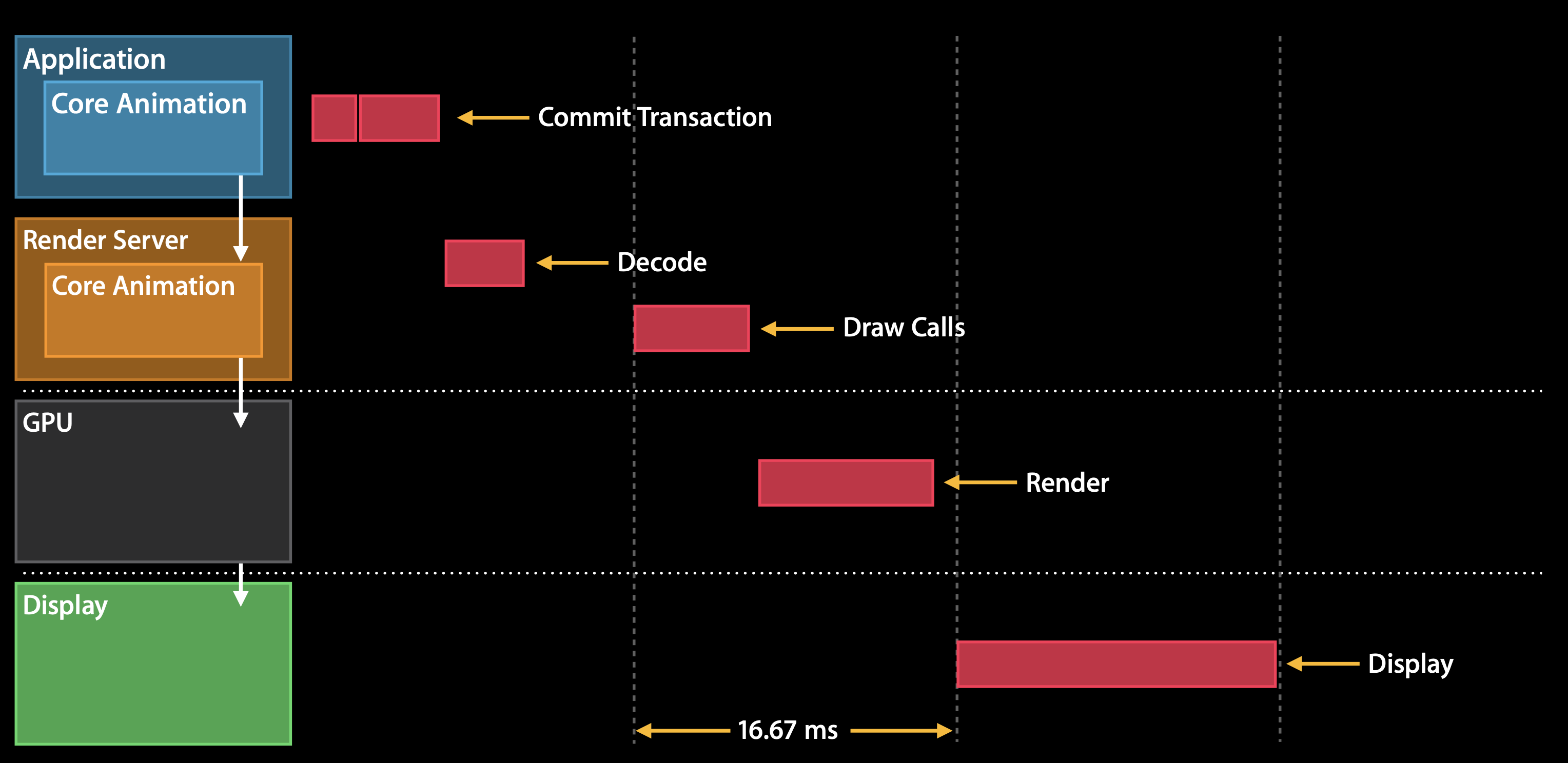

Core Animation Pipeline

Event handling phase - application builds a view hierarchy (either through UIKit or directly with Core Animation)

Commit transaction phase - view hierarchy is then committed to the render server over IPC (render server is "server-side" version of Core Animation)

View hierarchy is rendered using Metal/OpenGL on GPU

Note that the render server has to wait for the next vsync to obtain a buffer from the display to render into before it can issue draw calls to the GPU (see the gap between Decode and Draw Calls in diagram below)

Commit transaction (which happens in your app) has 4 sub-phases:

- Layout - set up views

- Display - draw views

  - Invoke drawRect:

  - Usually CPU (i.e. Core Graphics) or memory bound

- Prepare - additional Core Animation work

  - Image decoding (JPGs, PNGs) and image conversion (if in a format not supported by the GPU, e.g. an indexed bitmap)

- Commit - serialize layers and send to render server

  - Recursively iterates over the entire layer tree

  - Expensive if layer tree is complex

  - Performance tip: keep layer tree as flat as possible
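Since the commit sub-phase walks the entire layer tree, its cost grows with the number of layers. The sketch below is only an illustration of that point: LayerNode is a hypothetical stand-in type (not CALayer), and visitCount simply counts how many nodes a recursive walk would touch. It compares the same three visible layers with and without wrapper containers.

```swift
// Hypothetical stand-in for a layer; this is NOT CALayer.
final class LayerNode {
    let name: String
    var sublayers: [LayerNode]

    init(_ name: String, _ sublayers: [LayerNode] = []) {
        self.name = name
        self.sublayers = sublayers
    }
}

/// Number of nodes a recursive walk over the tree has to visit.
func visitCount(_ node: LayerNode) -> Int {
    return 1 + node.sublayers.reduce(0) { $0 + visitCount($1) }
}

/// Depth of the deepest branch, counting the root as 1.
func depth(_ node: LayerNode) -> Int {
    return 1 + (node.sublayers.map(depth).max() ?? 0)
}

// Same three visible leaves, wrapped in extra container layers.
let nested = LayerNode("root", [
    LayerNode("wrapperA", [LayerNode("wrapperB", [LayerNode("icon")])]),
    LayerNode("wrapperC", [LayerNode("label")]),
    LayerNode("wrapperD", [LayerNode("badge")]),
])

// Same three leaves attached directly to the root.
let flat = LayerNode("root", [
    LayerNode("icon"),
    LayerNode("label"),
    LayerNode("badge"),
])

print(visitCount(nested), depth(nested)) // prints "8 4"
print(visitCount(flat), depth(flat))     // prints "4 2"
```

The flat tree shows the same content with half the nodes to serialize, which is the idea behind the performance tip.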

Animations are processed in 3 stages (first 2 in your app, last step in render server):

- Create animation and update view hierarchy

- Prepare and commit animation

- Render each frame (render server)

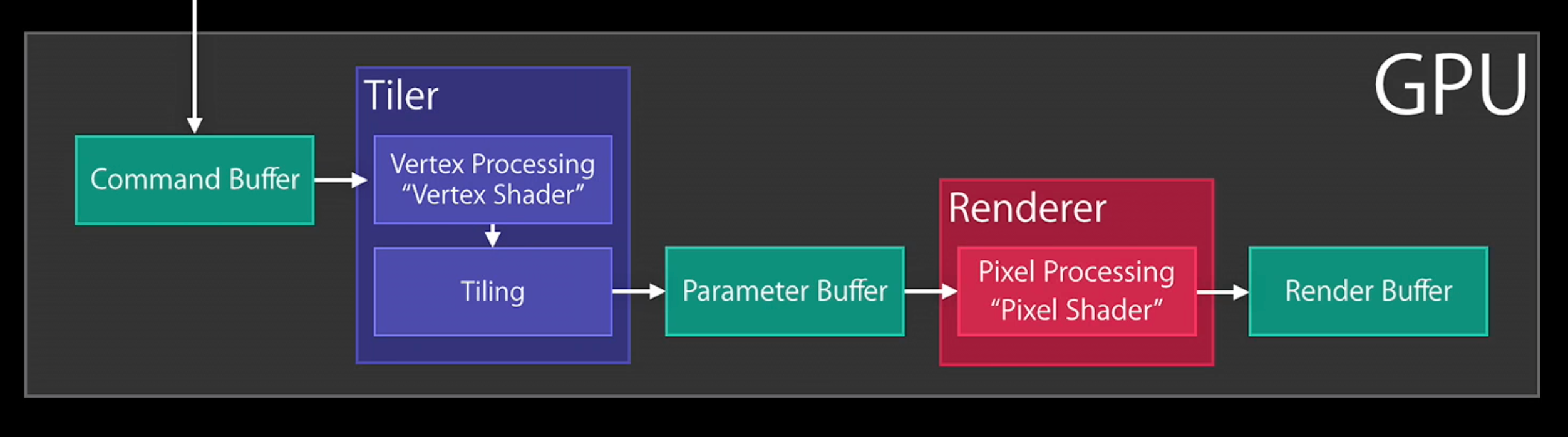

Rendering Concepts

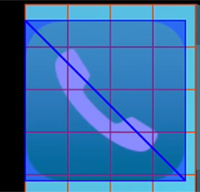

Tile-based rendering - screen is split into tiles of NxN pixels (each tile fits in SoC cache)

Geometry is split into tile buckets

Example: phone icon

- CALayer is spread across many tiles, and layer itself is split into two triangles

- Internally, GPU will further subdivide the triangles until each triangle fits in exactly one tile

Rasterization begins after all geometry is submitted
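To make the tile bucketing concrete, here is a small sketch that counts how many tiles a layer's screen-space rectangle touches. PixelRect and tilesCovered are hypothetical names, the 32-pixel tile size is an assumption chosen for illustration (the notes only say NxN), and coordinates are assumed to be non-negative.

```swift
// Hypothetical screen-space rectangle in whole pixels.
struct PixelRect {
    let x: Int
    let y: Int
    let width: Int
    let height: Int
}

/// Number of tileSize x tileSize tiles that `rect` overlaps.
/// Assumes non-negative coordinates and a non-empty rectangle.
func tilesCovered(by rect: PixelRect, tileSize: Int) -> Int {
    precondition(tileSize > 0 && rect.width > 0 && rect.height > 0)
    precondition(rect.x >= 0 && rect.y >= 0)

    let firstColumn = rect.x / tileSize
    let lastColumn = (rect.x + rect.width - 1) / tileSize
    let firstRow = rect.y / tileSize
    let lastRow = (rect.y + rect.height - 1) / tileSize

    return (lastColumn - firstColumn + 1) * (lastRow - firstRow + 1)
}

// A 120x120 icon that is not aligned to the tile grid.
let icon = PixelRect(x: 20, y: 20, width: 120, height: 120)
print(tilesCovered(by: icon, tileSize: 32)) // prints "25"

// A 64x64 layer aligned to the tile grid.
let aligned = PixelRect(x: 32, y: 32, width: 64, height: 64)
print(tilesCovered(by: aligned, tileSize: 32)) // prints "4"
```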

Render pass steps:

- Core Animation submits OpenGL/Metal calls to GPU command buffer

- Vertex processing (i.e. vertex shaders) transforms all vertices into screen space

- Perform tiling (i.e. split geometry into tiles) and write tiled geometry to parameter buffer

- GPU waits until all geometry is processed or until parameter buffer is full

- Pixel processing (i.e. pixel shaders) runs and writes its output to the render buffer

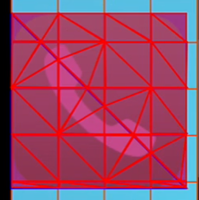

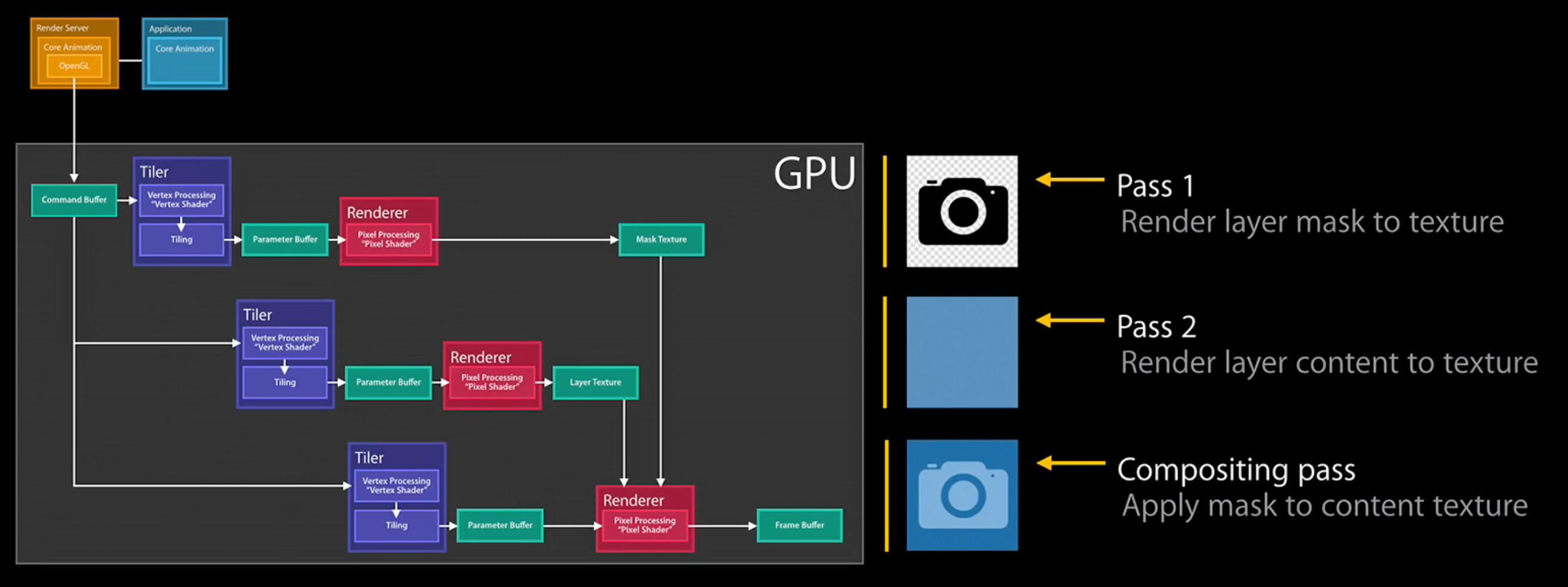

Example: Masking requires 3 render passes

Render pass 1: Render layer mask into texture

Render pass 2: Render layer content to texture

Render pass 3: Apply mask to content texture

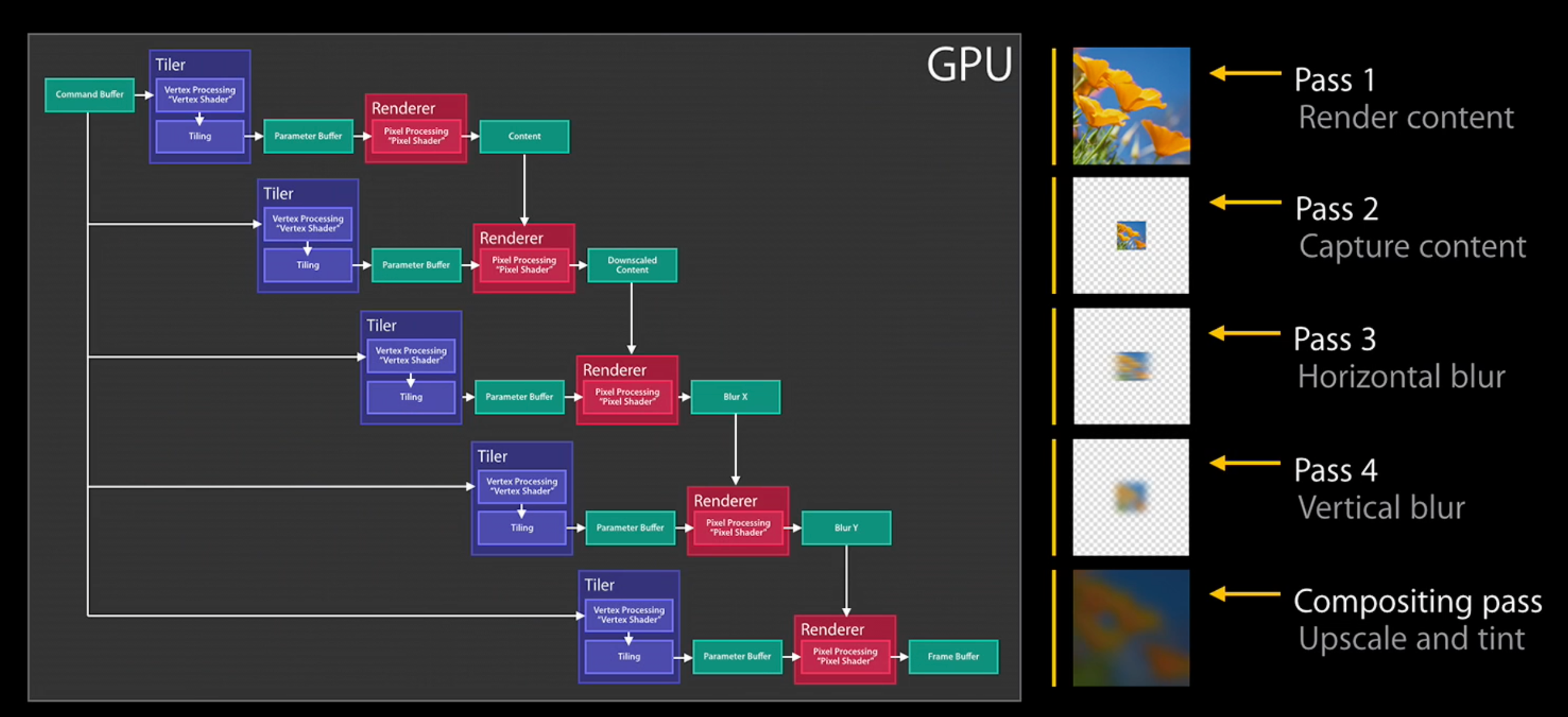

UIBlurEffect

UIBlurEffect requires 5 render passes (but exact number of render passes can depend on hardware model)

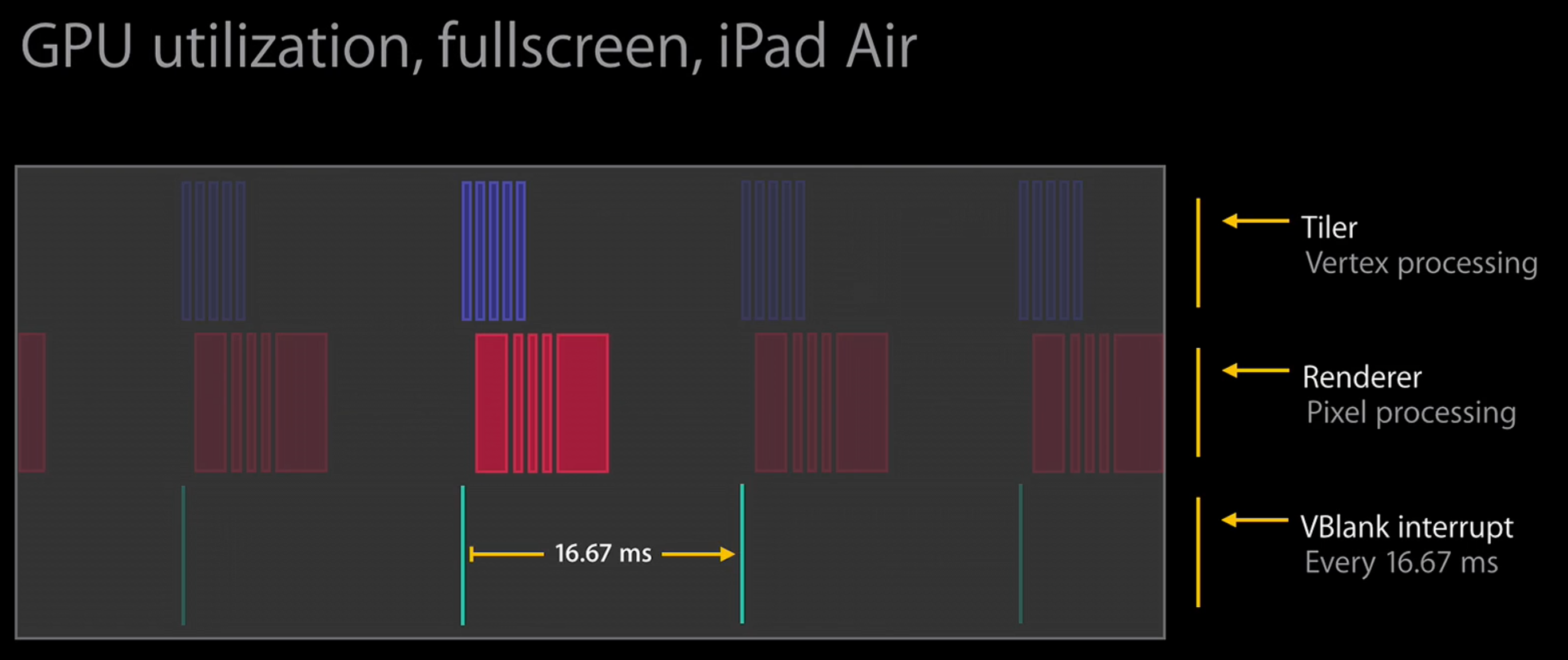

What does GPU utilization look like for the 5 render passes?

Note the 5 bars in each display frame, one for each render pass

Note that first tiler pass has to finish before first renderer stage can start

Note that 1st renderer pass is long (i.e. slow) because it actually renders the content

2nd pass (downscaling) is actually ~constant time

3rd and 4th passes (blurring) are fast since they only apply to a small area

Note the gaps between the renderer passes (highlighted in orange) - the GPU is idle there because it has to context switch

UIVibrancyEffect

UIVibrancyEffect has 7 render passes: first 5 passes are same as UIBlurEffect, last 2 passes use the blurred texture

Note that more passes = more context switches = more idle time, which eats away at the roughly 16.67 ms (1/60 s) we have to render each display frame
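The arithmetic behind that budget can be sketched as follows. All per-pass durations and the idle time per context switch are made-up numbers for illustration, not measurements from the talk, and the model assumes one context switch between each pair of consecutive passes. frameCostMs is a hypothetical helper.

```swift
// Frame budget at 60 frames per second, in milliseconds (about 16.67).
let frameBudgetMs = 1000.0 / 60.0

/// Total GPU time for one frame: the sum of the pass durations plus
/// idle time for each context switch between consecutive passes.
func frameCostMs(passDurationsMs: [Double], idlePerSwitchMs: Double) -> Double {
    let switches = max(passDurationsMs.count - 1, 0)
    return passDurationsMs.reduce(0, +) + Double(switches) * idlePerSwitchMs
}

// Illustrative (made-up) durations: 5 passes for blur, 7 for vibrancy.
let blurPasses = [4.0, 1.0, 0.5, 0.5, 1.0]
let vibrancyPasses = blurPasses + [1.5, 1.5]

let blurCost = frameCostMs(passDurationsMs: blurPasses, idlePerSwitchMs: 0.25)
let vibrancyCost = frameCostMs(passDurationsMs: vibrancyPasses, idlePerSwitchMs: 0.25)

print(blurCost)     // prints "8.0"
print(vibrancyCost) // prints "11.5"
print(frameBudgetMs - vibrancyCost > 0) // prints "true": still within budget
```

Under these assumed numbers the two extra vibrancy passes add 3.5 ms of work and idle time, which leaves much less headroom for the rest of the frame.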

Performance Checklist

CPU or GPU bound?

- Generally want to perform rendering on GPU, but sometimes CPU rendering makes sense

- Use both OpenGL ES Driver instrument and Time Profiler instrument to help make tradeoff

Too many offscreen passes?

- E.g. set shadowPath to avoid the offscreen pass for shadows

Too much blending?

Strange image formats or sizes?

- Avoid on-the-fly conversions or resizing

Any expensive effects? (e.g. blur and vibrancy)

Anything unexpected in hierarchy? (e.g. accidentally leaving excessive views in the view hierarchy)

- Use View Debugging feature in Xcode to carefully examine your view hierarchy and make sure it matches what you think it should be
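As an example of the shadowPath tip from the checklist, a minimal sketch follows. addShadow(to:) is a hypothetical helper and the shadow values are arbitrary; the CALayer properties themselves (shadowColor, shadowOpacity, shadowRadius, shadowOffset, shadowPath) are standard. The canImport guard is only there so the snippet compiles on platforms without UIKit.

```swift
#if canImport(UIKit)
import UIKit

/// Gives `view` a drop shadow and supplies an explicit shadowPath,
/// so the shadow shape does not have to be derived from the layer's
/// rendered contents.
func addShadow(to view: UIView) {
    let layer = view.layer
    layer.shadowColor = UIColor.black.cgColor
    layer.shadowOpacity = 0.3
    layer.shadowRadius = 4
    layer.shadowOffset = CGSize(width: 0, height: 2)

    // Explicit outline of the shadow, here simply the view's bounds.
    layer.shadowPath = UIBezierPath(rect: view.bounds).cgPath
}
#endif
```

The path is not updated automatically, so it needs to be set again whenever the view's bounds change (for example in layoutSubviews).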